Nuclear Destruction

The Nazi death camps and the mushroom cloud of nuclear explosion are the two most potent images of the mass killings of the twentieth century. As World War II ended and the cold war began, the fear of nuclear annihilation hung like a cloud over the otherwise complacent consumerism of the Eisenhower era. The new technologies of mass death exacted incalculable costs, draining the treasuries of the United States and the Soviet Union and engendering widespread apocalyptic fatalism, distrust of government, and environmental degradation.

The advent of nuclear weapons fundamentally altered both the nature of war and the relationship of the military with the rest of society. A 1995 study by John Pike, the director of the space policy project at the Federation of American Scientists, revealed that the cost of nuclear weapons has constituted about one-fourth to one-third of the entire American military budget since 1945. President Eisenhower, in his farewell speech on the threats posed by the "military-industrial complex," warned of the potential of new technology to dominate the social order in unforeseen ways. The "social system which researches, chooses it, produces it, polices it, justifies it, and maintains it in being," observed British social historian E. P. Thompson, orients its "entire economic, scientific, political, and ideological support-system to that weapons system" (Wieseltier 1983, p. 10).

Throughout the cold war, U.S. intelligence reports exaggerated the numbers and pace of development of the Soviet Union's production of bombs and long-range nuclear forces, thus spurring further escalations of the arms race and the expansion of the military-industrial complex. In the 1950s there was the bomber gap, in the 1960s it was the missile gap, in the 1970s the civilian defense gap, and in the 1980s the military spending gap. The Soviet Union and the members of the NATO alliance developed tens of thousands of increasingly sophisticated nuclear weapons and delivery systems (e.g., cannons, bombers, land- and submarine-based intercontinental ballistic missiles) and various means of protecting them (e.g., hardened underground silos, mobile launchers, antiballistic missiles). With the possible exception of the hydrogen bomb, every advance in nuclear weaponry—from the neutron bomb and X-ray warheads to the soldier-carried Davy Crockett fission bomb—was the product of American ingenuity and determination.

A Brief History of Cold War Nuclear Developments

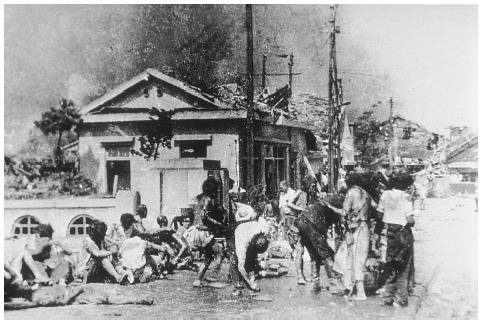

During World War II, while German research resources were largely invested in developing the V-1 and V-2 guided missiles, similar investments were being made by the United States and selected allies in producing the ultimate bomb through the highly secret Manhattan Project. The first nuclear device was detonated before dawn on July 16, 1945, at the Alamogordo Test Range in south central New Mexico. Within two months, atomic bombs were dropped on the Japanese cities of Hiroshima and Nagasaki. The U.S. government claimed that these bombings were necessary to shorten the war and avoid the anticipated heavy casualties of a land invasion of Japan, but later revisionist historians have disputed that motivation, claiming rather that the blasts were intended as an advertisement of American power over the Soviet Union.

The United States continued to develop the new weaponry even though the war had concluded. Whether this prompted the Soviet Union to embark on its own weapons program, or whether it would have done so even if the United States had ceased production, remains a matter of debate. The Soviets deeply feared that the United States, having demonstrated its willingness to use the weapon on civilian populations, might not hesitate to do so again during the cold war. Two weeks after Hiroshima's destruction, Stalin ordered a crash program to develop an atomic bomb using Gulag prisoners to mine uranium and construct weapons facilities, putting the needs of his people behind those of the bomb. The race for nuclear supremacy had begun.

The 1940s saw the dawn of the cold war: the Soviet blockade of Berlin, Mao's victory over the Nationalists in China, discoveries and accusations of espionage, and, in September 1949, evidence that the Russians had tested their own bomb. Major General Curtis LeMay, head of the newly formed Strategic Air Command, was ordered to prepare his Air Force unit for possible atomic attack. His first war plan, based on a concept called "killing a nation," involved attacking seventy Soviet cities with 133 atomic bombs.

Fears of imminent nuclear war swept the globe. President Truman believed that if the Russians had the bomb, they would use it. The physicist Edward Teller pushed for a thermonuclear weapon whose virtually unlimited power would dwarf the atomic bombs produced under the Manhattan Project. The "Super," as it would be called, was the hydrogen bomb. In January 1950 Truman approved its development. Five months later, North Korea, with Stalin's support, attacked South Korea. Later that year, when in retreat, the North Koreans were reinforced by another Russian ally, Communist China. The cold war was in full swing, and the climate of fear and suspicion fueled McCarthyism. In 1952 the first hydrogen bomb was detonated, releasing a force some 800 times greater than the weapon that had destroyed Hiroshima. The bomb, initially sixty-two tons, was later made smaller and lighter, allowing its placement on missiles.

It was then that President Eisenhower's Secretary of State, John Foster Dulles, gave the impression that the United States would instigate nuclear war if there were any communist encroachments upon the "free world." Peace was maintained through the deterrent of fear: "Mutually Assured Destruction" (MAD) became the principal nuclear strategy for the rest of the twentieth century, making it inconceivable that politicians would risk the destruction of the planet by actually deploying the weapons they were so busily and alarmingly developing and stockpiling. To ensure retribution following a first strike, stockpiles continued growing to the point where human populations could be killed many times over.

Nuclear anxieties intensified with the development of strategic intercontinental rockets capable of delivering a nuclear warhead anywhere in the world within minutes. Because atomic war would basically be a one-punch affair, the alacrity and thoroughness of the first strike became the preoccupation of strategic planners. The race between the United States and Russia to refine German rocket technology intensified during the 1950s. When Russia launched the first satellite, Sputnik, in 1957, Americans panicked at the thought of Soviet hardware overhead and its ability to drop weapons from orbit. To recoup lost face and bolster national confidence, the United States entered the space race with its own orbital missions and even considered a plan to detonate a Hiroshima-size nuclear bomb on the moon that would be visible to the naked eye. In 1961 the Soviets placed the first man, Yuri Gagarin, into orbit, and the nuclear arms race merged with the space race as the key instruments of cold war rivalry between the Soviet Union and the United States.

But the critical event of the 1960s was the 1962 discovery that the Russians had deployed forty-eight offensive ballistic missiles in Cuba. In a showdown of nuclear brinkmanship, both the Soviet Union and the United States went on highest alert in their preparations for war. For thirteen days the cold war almost went hot. As the Russian nuclear missiles were nearing operational status, the Kennedy administration weighed such options as mounting an air strike, staging an invasion, or conducting a naval blockade. After the blockade was selected, a Russian fleet steamed west to break it, and a U.S. spy plane was shot down over Cuban territory, killing the pilot. Eventually, though, diplomacy and level heads prevailed. The missiles were removed in exchange for a U.S. pledge not to invade the communist country and to remove its obsolete Jupiter missiles from Turkey. The nations' closeness to the unthinkable contributed to their agreeing on the 1963 Nuclear Test Ban Treaty.

There was one final peaking of fears and expenditures before the collapse of the Soviet Union: the entry of another communist superpower on the nuclear game board. China, which had detonated its first atomic bomb in 1964, claimed thirteen years later to have successfully tested guided missiles with nuclear warheads. Reports surfaced of nuclear shelters being constructed in Manchuria. The 1970s concluded with six members in the nuclear club and with memories associated with the seventy-fifth anniversary of the beginning of World War I and how an unpredictable chain of events could set into motion unwanted global conflict.

In 1982 President Reagan unilaterally discontinued negotiations for a comprehensive test ban of nuclear weapons, echoing the military's claims of a "testing gap" with the Soviet Union. In fact, as of the beginning of 1985, the United States had over the previous four decades (since 1945) conducted some 200 more nuclear tests than had the Soviets. The President proposed the Strategic Defense Initiative, popularly known as "Star Wars," to protect the nation from missile attack by using exotic technologies that were still on the drawing board. Pure scientific research in physics, lasers, metallurgy, artificial intelligence, and dozens of other areas became largely focused on direct military uses. By the mid-1980s, 70 percent of American programs in research and development and testing and evaluation were defense-related, and nearly 40 percent of all U.S. engineers and scientists were involved in military projects.

Compounding public anxieties was a 1982 forecast by U.S. intelligence agencies that thirty-one countries would be capable of producing nuclear weapons by 2000. From the scientific community came highly publicized scenarios of a postwar "nuclear winter," possibly similar to conditions that led to the extinction of dinosaurs following the impact of an asteroid. Groups such as Physicians for Social Responsibility warned that such a conflict would lead to the return to the Dark Ages. Books like Jonathan Schell's The Fate of the Earth (1982) and media images such as ABC television's special The Day After (1983) produced a degree of public unease not seen since the 1962 Cuban Missile Crisis.

The collapse of the Soviet Union and the end of the cold war in the late 1980s did not conclude American research and development—nor fears of a nuclear holocaust. In Russia, equipment malfunctions have accidentally switched Russian nuclear missiles to a "combat ready" status, and deteriorating security systems have increased the likelihood of weapons-grade materials falling into the hands of rogue states and terrorists. In the United States, major military contractors sought long-term sources of revenue to compensate for their post–cold war losses, and Republicans continued pushing for a defensive missile shield. In the mid-1990s the Department of Energy approved expenditures of hundreds of millions of dollars for superlasers and supercomputers to simulate weapons tests. A sub-critical nuclear weapons test, called Rebound, was conducted in 1997 at the Nevada Test Site. At the beginning of President George W. Bush's term, the 2001 Defense Authorization Bill was passed requiring that the Energy and Defense Departments study a new generation of precision, low-yield earth penetrating nuclear weapons to "threaten hard and deeply buried targets."

The Proliferation

Through espionage, huge national investments, and a black market of willing Western suppliers of needed technologies and raw materials, the American nuclear monopoly was broken with the successful detonations by the Soviet Union (1949), the United Kingdom (1952), France (1960), China (1964), India (1974), and Pakistan (1998).

Although the Western allies made the Soviet Union the scapegoat for the proliferation of nuclear weapons, it has been the export of Western technology and fuel that has given other countries the capability of building their own bombs. Although publicly dedicated to controlling the proliferation of "the bomb," in the fifty years following the Trinity detonation the United States shipped nearly a ton of plutonium to thirty-nine countries, including Argentina, India, Iran, Iraq, Israel, Japan, New Zealand, Pakistan, South Africa, Sweden, Turkey, Uruguay, and Venezuela.

The countries suspected of having (or having had) nuclear weapons programs include Iraq, Romania, North Korea, Taiwan, Brazil, Argentina, and South Africa. There is little doubt that the sixth member of the nuclear club is Israel, which was supplied a reactor complex and bomb-making assistance by the French as payment for its participation in the 1956 Suez Crisis. Despite its concerns over nuclear proliferation, the United States looked the other way as the Israeli nuclear program progressed, owing to the country's strategic position amid the oil-producing countries of the Middle East. When a Libyan airliner strayed over the highly secretive Negev Nuclear Research Center in 1973, Israeli jets shot it down, killing all 104 passengers.

Living with the Bomb

In By the Bomb's Early Light (1985) Paul Boyer asks how a society lives with the knowledge of its capacity for self-destruction. However, such thinking was in vogue with the approach of the West's second millennium, when the media were saturated with doomsday forecasts of overpopulation, mass extinctions, global warming, and deadly pollutants. By the end of the 1990s, half of Americans believed that some manmade disaster would destroy civilization.

The real possibility of nuclear war threatens the very meaning of all personal and social endeavors, and all opportunities for transcendence. In 1984, to dramatize the equivalency of nuclear war with collective suicide, undergraduates at Brown University voted on urging the school's health service to stockpile "suicide pills" in case of a nuclear exchange.

Such existential doubts were not mollified by the government's nuclear propaganda, which tended to depict nuclear war as a survivable natural event. Americans were told that "A Clean Building Seldom Burns" in the 1951 Civil Defense pamphlet "Atomic Blast Creates Fire," and that those who fled the cities by car would survive in the 1955 pamphlet "Your Car and CD [civil defense]: 4 Wheels to Survival."

Not even the young were distracted from thinking about the unthinkable. Civil defense drills became standard exercises at the nation's schools during the 1950s, including the "duck and cover" exercises in which students were instructed to "duck" under their desks or tables and "cover" their heads for protection from a thermonuclear blast. In the early 1980s, a new curriculum unit on nuclear war was developed for junior high school students around the country. Psychologists wrote about the implications of youngsters expecting never to reach adulthood because of nuclear war.

The Impacts of the Nuclear Arms Race on Culture and Society

In the words of Toronto sociologist Sheldon Ungar, "Splitting the atom dramatically heightened the sense of human dominion; it practically elevated us

Scholars and essayists have speculated liberally on the psychological and cultural effects of growing up with the possibility of being vaporized in a nuclear war. For instance, did it contribute to permissive parenting strategies by older generations seeking to give some consolation to their children? Or the cultural hedonism and dissolution of mores evidenced when these children came of age? It certainly did contribute to the generational conflicts of the 1960s, as some baby boomers laid blame for the precarious times on older generations.

It is in the arts that collective emotions and outlooks are captured and explored, and fears of the atomic unknown surfaced quickly. As nuclear weapons tests resumed in Nevada in 1951, anxieties over radioactive fallout were expressed cinematically in a sci-fi genre of movies featuring massive mutant creatures. These were to be followed by end-of-the-world books (e.g., Alas, Babylon in 1959), films (e.g., On the Beach in 1959, Fail-Safe in 1964, Dr. Strangelove in 1964), television series (e.g., Planet of the Apes), and music (e.g., Bob Dylan's "Hard Rain" and Barry McGuire's "Eve of Destruction").

The bomb also opened the door to UFOs. In the same state where the first nuclear bomb exploded two years earlier, near Roswell, New Mexico, an alien spaceship supposedly crashed to Earth, although later reports have debunked that story despite the stubborn belief in an alien visitation among various UFO aficionados. Were the aliens contemporary manifestations of angels, messengers carrying warnings of humanity's impending doom? Or did our acquisition of the ultimate death tool make our neighbors in the cosmos nervous? The latter idea was the theme of the 1951 movie The Day the Earth Stood Still, in which the alien Klaatu issued an authoritarian ultimatum to earthlings to cease their violence or their planet would be destroyed.

Harnessing the Atom for Peaceful Purposes

The logic of the death-for-life tradeoff runs deep throughout all cultural systems. It is a price we see exacted in the natural order, in the relationship between predator and prey, and in the economic order in the life-giving energies conferred by fuels derived from the fossils of long-dead animals. Over 80 percent of American energy comes from coal, oil, and gas, whose burning, in turn, produces such environment-killing by-products as acid rain.

This logic extends to attempts to harness nuclear energy for peacetime uses. In theory, such energy can be virtually limitless in supply. In the words of the science writer David Dietz, "Instead of filling the gasoline tank of your automobile two or three times a week, you will travel for a year on a pellet of atomic energy the size of a vitamin pill. . . . The day is gone when nations will fight for oil . . ." (Ford 1982, pp. 30–31). But it is a Faustian bargain because the by-products, most notably plutonium, are the most lethal substances known to man. Thousands of accidents occur annually in America's commercial nuclear plants. Collective memory remains vivid of the meltdown at the Chernobyl nuclear plant in the former Soviet Union in 1986 and the 1979 accident at Three Mile Island in Pennsylvania.

The Ecological Legacy

A comprehensive 1989 study by Greenpeace and the Institute for Policy Studies estimated that at least fifty nuclear warheads and nine nuclear reactors lie on ocean floors because of accidents involving American and Soviet rockets, bombers, and ships. Radiation leaks south of Japan from an American hydrogen bomb accidentally dropped from an aircraft carrier in 1965. In the 1990s equipment malfunctions led to Russian missiles accidentally being switched to "combat mode," according to a 1998 CIA report.

The cold war rush to build nuclear weapons in the 1940s and 1950s led to severe contamination of the land and air. In one 1945 incident at the 560-square-mile Hanford nuclear reservation in Washington State, over a ton of radioactive material of roughly 350,000 to 400,000 curies (one curie being the amount of radiation emitted in a second by 1,400 pounds of enriched uranium) was released into the air. There, the deadly by-products of four decades of plutonium production leaked into the area's aquifer and into the West's greatest river, the Columbia. Fish near the 310-square-mile Savannah River site, where 35 percent of the weapons-grade plutonium was produced, are too radioactive to eat. Federal Energy Department officials revealed in 1990 that 28 kilograms of plutonium, enough to make seven nuclear bombs, had escaped into the air ducts at the Rocky Flats weapons plant near Denver.

Such environmental costs of the cold war in the United States are dwarfed by those of the former Soviet Union. In the early years of the Soviet bomb program at Chelyabinsk, radioactive wastes were dumped into the Techa River. When traces showed up 1,000 miles away in the Arctic Ocean, wastes were then pumped into Karachay Lake until the accumulation was 120 million curies, radiation so great that a person standing on the lake's shore would receive a lethal dose in an hour.

The Corruption of Public Ethics

Perhaps even more devastating than the environmental damage wrought by the nuclear arms race was its undermining of public faith in government. Public ethics were warped in numerous ways. Secrecy for matters of national security was deemed paramount during the cold war, leaving Americans unaware of the doings of their government. The secrecy momentum expanded beyond matters of nuclear technologies and strategies when President Truman issued the first executive order authorizing the classification of nonmilitary information as well.

Some instances of severe radioactivity risk were kept secret from the public by federal officials. Thousands of workers, from uranium miners to employees of over 200 private companies doing weapons work, were knowingly exposed to dangerous levels of radiation. Though many of these firms were found by various federal agencies to be in violation of worker safety standards set by the Atomic Energy Commission, there were no contract cancellations or penalties assessed that might impede the pace of weapons production. After three decades of denials and fifty-seven years after the Manhattan Project began processing radioactive materials, the federal government finally conceded in 2000 that nuclear weapons workers had been exposed to radiation and chemicals that produced cancer in 600,000 of them and early death for thousands of others.

The dangers extended well beyond atomic energy workers; ordinary citizens were exposed to water and soil contaminated with toxic and radioactive waste. From 1951 to 1962, fallout from the Atomic Energy Commission's open-air nuclear blasts in the Nevada desert subjected thousands to cancer-causing radiation in farm communities in Utah and Arizona. According to a 1991 study by the International Physicians for the Prevention of Nuclear War, government officials expected this to occur but nevertheless chose this site over a safer alternative on the Outer Banks of North Carolina, where prevailing winds would have carried the fallout eastward over the ocean. The study predicted that 430,000 people would die of cancer over the remainder of the twentieth century because of their exposures, and that millions more would be at risk in the centuries to come.

According to a 1995 report of the Advisory Committee on Human Radiation Experiments, between 1944 and 1974 more than 16,000 Americans were unwitting guinea pigs in 435 documented radiation experiments. Trusting patients were injected with plutonium just to see what would happen. Oregon prisoners were subjected to testicular irradiation experiments at doses 100 times greater than the annual allowable level for nuclear workers. Boys at a Massachusetts school for the retarded were fed doses of radioactive materials in their breakfast cereal. And dying patients, many of whom were African Americans and whose consent forms were forged by scientists, were given whole-body radiation exposures.

Conclusion

Nuclear anxieties have migrated from all-out war among superpowers to fears of nuclear accidents and atomic attacks by rogue nations. According to Valentin Tikhonov, working conditions and living standards for nuclear and missile experts have declined sharply in post–Communist Russia. With two-thirds of these employees earning less than fifty dollars per month, there is an alarming temptation to sell expertise to aspiring nuclear nations. During its war with Iran, Iraq in 1987 tested several one-ton radiological weapons designed to shower radioactive materials on target populations to induce radiation sickness and slow, painful deaths. And during May of 1998, two bitter adversaries, India and Pakistan, detonated eleven nuclear devices over a three-week period.

Some believe that with the advent of nuclear weapons, peace will be forever safeguarded, since their massive use would likely wipe out the human race and perhaps all life on Earth. Critics of this outlook have pointed out that there has never been a weapon developed that has not been utilized, and that the planet Earth is burdened with a store of some 25,000 to 44,000 nuclear weapons.

See also: Apocalypse; Disasters; Extinction; Genocide; Holocaust; War

Bibliography

Associated Press. "16,000 Now Believed Used in Radiation Experiments." San Antonio Express-News, 18 August 1995, 6A.

Boyer, Paul. By the Bomb's Early Light: American Thought and Culture at the Dawn of the Atomic Age. New York: Pantheon, 1985.

Broad, William J. "U.S. Planned Nuclear Blast on the Moon, Physicist Says." New York Times, 16 May 2000, A17.

Broad, William J. "U.S., in First Atomic Accounting, Says It Shipped a Ton of Plutonium to 39 Countries." New York Times, 6 February 1996, 10.

"Brown Students Vote on Atom War 'Suicide Pills.'" New York Times, 11 October 1984.

Ford, Daniel. The Cult of the Atom: The Secret Papers of the Atomic Energy Commission. New York: Simon and Schuster, 1982.

Institute for Energy and Environmental Research and the International Physicians for the Prevention of Nuclear War. Radioactive Heaven and Earth: The Health and Environmental Effects of Nuclear Weapons Testing In, On and Above the Earth. New York: Apex Press, 1991.

Leahy, William D. I Was There: The Personal Story of the Chief of Staff to Presidents Roosevelt and Truman Based on His Notes and Diaries. New York: Whittlesey House, 1950.

Maeroff, Gene I. "Curriculum Addresses Fear of Atom War." New York Times, 29 March 1983, 15, 17.

Maloney, Lawrence D. "Nuclear Threat through Eyes of College Students." U.S. News & World Report, 16 April 1984, 33–37.

Rosenthal, Andrew. "50 Atomic Warheads Lost in Oceans, Study Says." New York Times, 7 June 1989, 14.

Subak, Susan. "The Soviet Union's Nuclear Realism." The New Republic, 17 December 1984, 19.

Tikhonov, Valentin. Russia's Nuclear and Missile Complex: The Human Factor in Proliferation. Washington, DC: Carnegie Endowment for International Peace, 2001.

Toynbee, Arnold. War and Civilization. New York: Oxford University Press, 1950.

Ungar, Sheldon. The Rise and Fall of Nuclearism: Fear and Faith As Determinants of the Arms Race. University Park: Pennsylvania State University Press, 1992.

Welsome, Eileen. The Plutonium Files: America's Secret Medical Experiments in the Cold War. New York: Dial Press, 1999.

Werth, Alexander. Russia at War. New York: E. P. Dutton, 1964.

Internet Resources

Department of Energy. "Advisory Committee on Human Radiation Experiments: Final Report." In the Office of Human Radiation Experiments [web site]. Available from http://tis.eh.doe.gov/ohre/roadmap/achre/report.html.

Jacobs, Robert A. "Presenting the Past Atomic Café as Activist Art and Politics." In the Public Shelter [web site]. Available from www.publicshelter.com/main/bofile.html.

Public Broadcasting System. "The American Experience: Race for the Super Bomb." In the PBS [web site]. Available from www.pbs.org/wgbh/amex/bomb/index.html.

Wouters, Jørgen. "The Legacy of Doomsday." In the ABCnews.com [web site]. Available from http://abcnews.go.com/sections/world/nuclear/nuclear1.html.

MICHAEL C. KEARL